Easy understanding examples to show how to find and drop duplicate rows with Pandas

In data analysis and preprocessing, it’s crucial to identify and handle duplicate rows in a dataset. Duplicate rows can lead to biased analysis, skewed statistics, and erroneous results. Pandas, a powerful Python library for data manipulation, provides several methods to identify and handle duplicate rows effectively. In this tutorial, we will explore some common methods in Pandas to find duplicate rows, accompanied by easy understanding examples.

Create a DataFrame

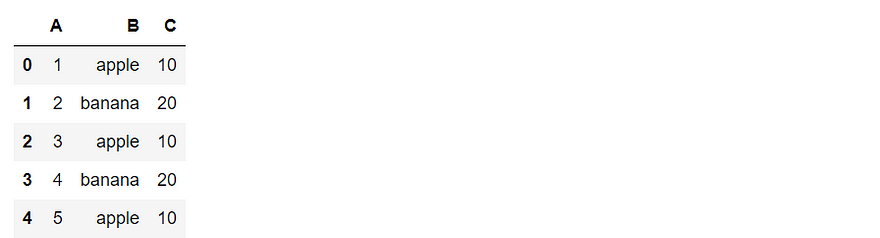

Let’s consider an example where we have a DataFrame called ‘df’ with columns ‘A’, ‘B’, and ‘C’:

import pandas as pd

# Creating a sample DataFrame

data = {'A': [1, 2, 1, 2, 1],

'B': ['apple', 'banana', 'apple', 'banana', 'apple'],

'C': [10, 20, 10, 20, 10]}

df = pd.DataFrame(data)

df

Using ‘duplicated()’ method:

The ‘duplicated()’ method in Pandas helps identify duplicate rows in a DataFrame. It returns a boolean Series indicating whether each row is a duplicate or not.

We can see the duplicate rows clearly because the DataFrame has only 5 rows. In most case, we have a large DataFrame, which usually takes time for us to find the duplicate rows.

# Finding duplicate rows

duplicate_rows = df.duplicated()

duplicate_rows0 False

1 False

2 True

3 True

4 True

dtype: bool

In the output, ‘False’ indicates non-duplicate rows, while ‘True’ represents duplicate rows.

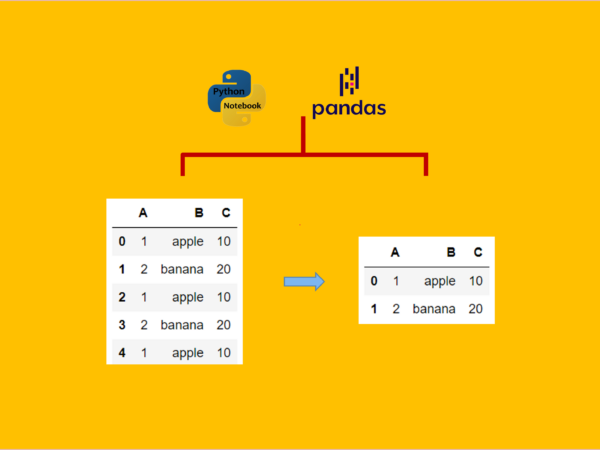

Using the ‘drop_duplicates()’ method:

The ‘drop_duplicates()’ method allows us to remove duplicate rows from a DataFrame. By default, it keeps the first occurrence of the duplicate rows and drops the subsequent ones. Here’s an example:

# Dropping duplicate rows

df_no_duplicates = df.drop_duplicates(inplace=False)

df_no_duplicates

In the output, we can observe that the duplicate rows with index 2, 3 and 4 have been dropped, and the resulting DataFrame contains unique rows. The use of inplace=False tells pandas to return a new DataFrame with duplicates dropped, but you keep the original DataFrame unchanged.

df

However, you can also use inplace=True to change the original DataFrame with duplicates dropped.

df.drop_duplicates(inplace=True)

df

Using the ‘duplicates()’ subset method

In many cases, only some columns are duplicates, which we can use .duplicates(subset=['cols'])` method to remove. Here is an example:

import pandas as pd

# Creating a sample DataFrame

data = {'A': [1, 2, 3, 4, 5],

'B': ['apple', 'banana', 'apple', 'banana', 'apple'],

'C': [10, 20, 10, 20, 10]}

df_new = pd.DataFrame(data)

df_new

# Finding duplicate rows

dup_rows1 = df_new.duplicated()

dup_rows1

In the output, there is no duplicate because not all the columns contain duplicates. In this case, we specify the subset as follows:

# Finding duplicate rows

dup_rows2 = df_new.duplicated(subset=['B','C'])

dup_rows2

Using the ‘drop_duplicates()’ subset method

In this example, we have duplicate items in the columns ‘B’ and ‘C’. We can remove them using .drop_duplicates(subset=['col1','col2'])method to remove. Here is the example:

df_no_duplicates1 = df_new.drop_duplicates(subset=['B'],keep="first", inplace=False)

df_no_duplicates1

Or we can specify subset=['B'] or subset=['B','C'], because duplicates in columns ‘B’ and ‘C’ are on the same rows.

df_no_duplicates2 = df_new.drop_duplicates(subset=['C'],keep="first", inplace=False)

df_no_duplicates2Or

Ordf_no_duplicates3 = df_new.drop_duplicates(subset=['B','C'],keep="first", inplace=False)

df_no_duplicates3We get the same output as above.

By specifying subset=['B'], subset=['C'] or subset=['B','C'], only the values in the related column or columns will be considered when identifying duplicates. Adjusting the subset and keep parameters can help you tailor the behavior of drop_duplicates() according to your specific requirements. In our example, duplicates in columns ‘B’ and ‘C’ are the same, so the results are the same.

Conclusion

Identifying and handling duplicate rows is a vital step in data analysis and preprocessing. In this tutorial, we explored two essential methods in Pandas: duplicated() and drop_duplicates(). The duplicated() method helped us identify duplicate rows by returning a boolean Series, while the drop_duplicates() method enabled us to remove duplicate rows from a DataFrame. These methods can be invaluable in ensuring data integrity and producing accurate analysis results. By utilizing these techniques, you can effectively handle duplicate rows in your datasets and enhance the quality of your data analysis projects.

Originally published at https://medium.com/ on June 29, 2023.