Pandas 2.0 Has a More Efficient Way to Handle and Process Large Data with PyArrow

Pandas was initially built on NumPy, which helped make Pandas such a popular library. NumPy is an essential library for anyone working with numerical data in Python, providing a foundation for scientific computing, data analysis, and machine learning applications. However, it has some limitations when it comes to handling large data, including memory constraints and limited support for out-of-core computing and parallel processing.

Pandas 2.0.0 was officially released on April 3, 2023, as a major release following the pandas 1.x series. There are many changes and updates in Pandas 2.0, and one of the major updates is the new Apache Arrow backend for pandas data.

Pandas 2.0 integrates Arrow to improve the performance and scalability of data processing. Arrow is an open-source software library for in-memory data processing that provides a standardized way to represent and manipulate data across different programming languages and platforms.

By integrating Arrow, Pandas 2.0 is able to take advantage of the efficient memory handling and data serialization capabilities of Arrow. This allows Pandas to process and manipulate large datasets more quickly and efficiently than before.

Some of the key benefits of integrating Arrow with Pandas include:

- Improved performance: Arrow provides a more efficient way to handle and process data, resulting in faster computation times and reduced memory usage.

- Scalability: Arrow’s standardized data format allows for easy integration with other data processing tools, making it easier to scale data processing pipelines.

- Interoperability: Arrow provides a common data format that can be used across different programming languages and platforms, making it easier to share data between different systems.

Overall, integrating Arrow with Pandas 2.0 provides a significant performance boost and improves the scalability and interoperability of data processing workflows.

Install Pandas 2.0

You should install Pandas 2.0 or later if you are still using an earlier version. The release can be installed from PyPI:

pip install --upgrade "pandas>=2.0.0"

Or from conda-forge:

conda install -c conda-forge "pandas>=2.0.0"

For mamba users:

mamba install -c conda-forge "pandas>=2.0.0"

Install PyArrow

Install the latest version of PyArrow from conda-forge using Conda:

conda install -c conda-forge pyarrow

Install the latest version from PyPI:

pip install pyarrow

For mamba users:

mamba install -c conda-forge pyarrow

Import Required Libraries

First, let’s import the required libraries. Besides pandas, we also need the time module to measure how long each read takes.

import pandas as pd

import time

Read with Pandas Default NumPy Backend

We use the Pandas default NumPy backend to read ‘yellow_tripdata_2019-01.csv’, a large dataset that can be downloaded from Kaggle.

start = time.time()

pd_df = pd.read_csv("./data/yellow_tripdata_2019-01.csv")

end = time.time()

pd_duration = end - start

print("Time to read data with pandas: {} seconds".format(round(pd_duration, 3)))

Time to read data with pandas: 13.185 seconds
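The same start/stop pattern is repeated for every read in this article. One way to avoid the repetition is a small helper like the sketch below. `timed` is a hypothetical name introduced here, and it uses `time.perf_counter()`, which is generally better suited to measuring elapsed time than `time.time()`.

```python
import time


def timed(func, *args, **kwargs):
    """Call func(*args, **kwargs) and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    elapsed = time.perf_counter() - start
    return result, elapsed


# Demonstration on a cheap built-in so the example runs anywhere.
total, seconds = timed(sum, range(1_000_000))
print("Time to sum: {} seconds".format(round(seconds, 3)))
```

With this helper, a read could be timed as `df, seconds = timed(pd.read_csv, "./data/yellow_tripdata_2019-01.csv")`.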

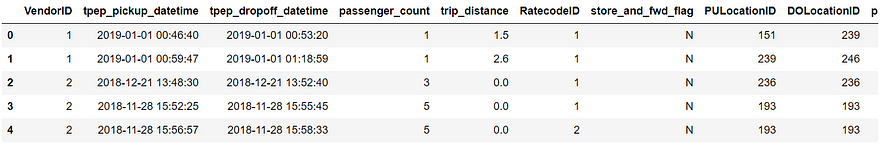

pd_df.head()Part of the result is as follows:

pd_df.shapeThe result:(7667792, 18)

Thus, the dataset has 7667792 rows and 18 columns.

pd_df.dtypes

VendorID int64

tpep_pickup_datetime object

tpep_dropoff_datetime object

passenger_count int64

trip_distance float64

RatecodeID int64

store_and_fwd_flag object

PULocationID int64

DOLocationID int64

payment_type int64

fare_amount float64

extra float64

mta_tax float64

tip_amount float64

tolls_amount float64

improvement_surcharge float64

total_amount float64

congestion_surcharge float64

dtype: object

Read Data with PyArrow Engine

Next, we check how long it takes to read the same dataset with the PyArrow engine.

start = time.time()

arrow_eng_df = pd.read_csv("./data/yellow_tripdata_2019-01.csv", engine='pyarrow')

end = time.time()

arrow_eng_duration = end - start

print("Time to read data with pandas pyarrow engine: {} seconds".format(round(arrow_eng_duration, 3)))

Time to read data with pandas pyarrow engine: 2.591 seconds

It only takes 2.591 seconds, about five times faster than the 13.185 seconds of the default read.

arrow_eng_df.dtypes

VendorID int64

tpep_pickup_datetime datetime64[ns]

tpep_dropoff_datetime datetime64[ns]

passenger_count int64

trip_distance float64

RatecodeID int64

store_and_fwd_flag object

PULocationID int64

DOLocationID int64

payment_type int64

fare_amount float64

extra float64

mta_tax float64

tip_amount float64

tolls_amount float64

improvement_surcharge float64

total_amount float64

congestion_surcharge float64

dtype: object

If you compare the data types, there are also some differences. For example, ‘tpep_pickup_datetime’ and ‘tpep_dropoff_datetime’ are now ‘datetime64[ns]’ instead of ‘object’.

Read Data with PyArrow Backend

Next, let’s see what happens when we read the dataset using the PyArrow backend.

start = time.time()

df_pyarrow_type = pd.read_csv("./data/yellow_tripdata_2019-01.csv", dtype_backend="pyarrow", low_memory=False)

end = time.time()

df_pyarrow_type_duration = end - start

print("Time to read data with pandas pyarrow datatype backend: {} seconds".format(round(df_pyarrow_type_duration, 3)))

Time to read data with pandas pyarrow datatype backend: 24.407 seconds

However, this method takes much longer than the previous two methods.

df_pyarrow_type.dtypes

VendorID int64[pyarrow]

tpep_pickup_datetime string[pyarrow]

tpep_dropoff_datetime string[pyarrow]

passenger_count int64[pyarrow]

trip_distance double[pyarrow]

RatecodeID int64[pyarrow]

store_and_fwd_flag string[pyarrow]

PULocationID int64[pyarrow]

DOLocationID int64[pyarrow]

payment_type int64[pyarrow]

fare_amount double[pyarrow]

extra double[pyarrow]

mta_tax double[pyarrow]

tip_amount double[pyarrow]

tolls_amount double[pyarrow]

improvement_surcharge double[pyarrow]

total_amount double[pyarrow]

congestion_surcharge double[pyarrow]

dtype: object

Read Data with PyArrow Engine and Backend

Now we test the speed using both engine='pyarrow' and dtype_backend="pyarrow".

start = time.time()

df_pyarrow = pd.read_csv("./data/yellow_tripdata_2019-01.csv", engine="pyarrow", dtype_backend="pyarrow")

end = time.time()

df_pyarrow_duration = end - start

print("Time to read data with pandas pyarrow engine and datatype: {} seconds".format(round(df_pyarrow_duration, 3)))

Time to read data with pandas pyarrow engine and datatype: 2.409 seconds

It only takes 2.409 seconds, which is very fast.

Copy-on-Write (CoW) Improvements

Copy-on-Write (CoW) is a technique first introduced in Pandas 1.5.0 to optimize memory usage when copying data. With CoW, when data is copied, the copy only contains pointers to the original data, rather than a full copy of the data itself. This can save memory and improve performance, as the original data can be shared between multiple copies until one of the copies is modified.

In Pandas 2.0, there have been several improvements to CoW that further optimize memory usage and performance, especially when working with smaller datasets or individual columns of data.

pd.options.mode.copy_on_write = True

start = time.time()

df_copy = pd.read_csv("./data/yellow_tripdata_2019-01.csv")

end = time.time()

df_copy_duration = end - start

print("Time to read data with pandas copy on write: {} seconds".format(round(df_copy_duration, 3)))

Time to read data with pandas copy on write: 17.187 seconds
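Reading a CSV does not by itself show what CoW does, so here is a small sketch of the behavior on a hypothetical three-row DataFrame. With CoW enabled, a column selected from a DataFrame shares data with its parent until it is written to, and the write then affects only the derived object.

```python
import pandas as pd

# Enable CoW only inside this block so the global setting is untouched.
with pd.option_context("mode.copy_on_write", True):
    trips = pd.DataFrame({"fare_amount": [5.0, 7.5, 10.0]})  # made-up values

    fares = trips["fare_amount"]  # no data is copied yet
    fares.iloc[0] = 99.0          # the write triggers the actual copy

    first_fare_in_parent = trips.loc[0, "fare_amount"]
    first_fare_in_child = fares.iloc[0]

print("parent:", first_fare_in_parent)
print("child :", first_fare_in_child)
```

The parent DataFrame keeps its original value, while the selected Series holds the modified one.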

Conclusion

Pandas 2.0 can greatly increase the speed of data processing. We compared four ways of reading the same CSV file: the default NumPy backend, the PyArrow engine, the PyArrow backend, and the PyArrow engine combined with the PyArrow backend. The combination of the PyArrow engine and the PyArrow backend was the fastest (2.409 seconds), and the PyArrow engine alone was nearly as fast (2.591 seconds), compared with 13.185 seconds for the default read.
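For a quick side-by-side view, the timings reported above can be turned into speedup factors relative to the default read. The numbers are the single-run measurements from this article, so treat the ratios as rough indications rather than benchmarks.

```python
# Read times in seconds, as reported earlier in this article.
timings = {
    "default": 13.185,
    "pyarrow engine": 2.591,
    "pyarrow backend": 24.407,
    "pyarrow engine + backend": 2.409,
}

baseline = timings["default"]

# A factor above 1 means faster than the default read, below 1 means slower.
speedups = {name: baseline / seconds for name, seconds in timings.items()}

for name, factor in speedups.items():
    print(f"{name:<26} {factor:.2f}x")

fastest = min(timings, key=timings.get)
print("Fastest:", fastest)
```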

Originally published at https://medium.com/ on June 26, 2023.